If you’re looking to optimize software and it processes real values, then there’s a very good chance that switching the data to Fixed Point arithmetic will save you execution time and energy.

Representation of Real Numbers

Floating Point Arithmetic: Definition

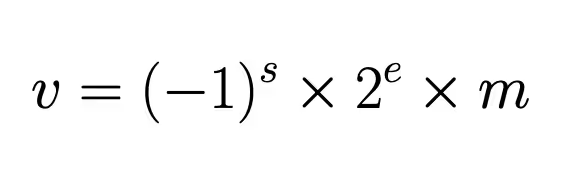

In computing, the data type most commonly used to represent real numbers is the float. This data type is defined by the IEEE 754 standard, which specifies the calculations and behavior of the float type. According to the IEEE-754 standard, a float represents a number in three parts:

- Its sign s

- Its exponent e

- Its mantissa m

The value of the number is then obtained using the following formula from IEEE 754 [08]:

The IEEE 754 standard allows numbers to be represented with different exponent values, adapting to the values represented at runtime. Using the standard is relatively simple for developers since numbers can be written directly in their decimal form, and the standard manages arithmetic errors such as overflow or division by zero.

Floating-point arithmetic requires the implementation of specific arithmetic operations. Initially, these operations were handled in software, forcing developers to program them manually.

Floating Point Hardware: FPUs and Performance

But starting in the 1950s, processors began to include FPUs (Floating Point Units — coprocessors dedicated to floating-point arithmetic), allowing fast floating-point calculations without developer intervention.

Advances made by hardware manufacturers in the field of FPUs now make it possible to use float types on many computers with very low computation times.

Fixed Point Artithmetic: Definition

Although the float representation is used most often, there are other formats, including fixed-point representation. Fixed point is an older format than floating point, used before the advent of standards and electronic components that enabled floating-point arithmetic. However, this format is still used when the hardware target running the software does not have an FPU.

Fixed Point Representation Formats

Fixed-point representation represents a real number in the same way as an integer. An arbitrary size is set for the integer part and the fractional part (fixing the position of the “point”), the integer part is represented as an integer, and the fractional part by representing inverse powers of 2 (2⁻¹, 2⁻², etc.) [Yates]. Fixed-point representation therefore encompasses several formats. For example, on 32 bits, one can represent real numbers using a Q0.31 fixed-point representation — with 1 sign bit, no integer part, and a 31-bit fractional part. Alternatively, a Q15.16 format may be used — 1 sign bit, 15 integer bits, and 16 fractional bits.

Converting Decimal to Fixed Point

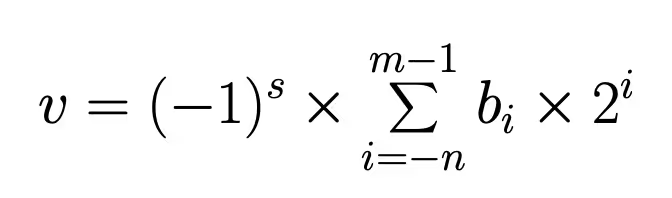

To recover the value of a fixed-point number, we apply the following formula:

Where:

- v is the value of the number,

- s is the sign bit (0 for positive, 1 for negative),

- m is the size of the integer part,

- n is the size of the fractional part of our representation,

- and bᵢ is the value of the bit at index i in the fixed-point representation.

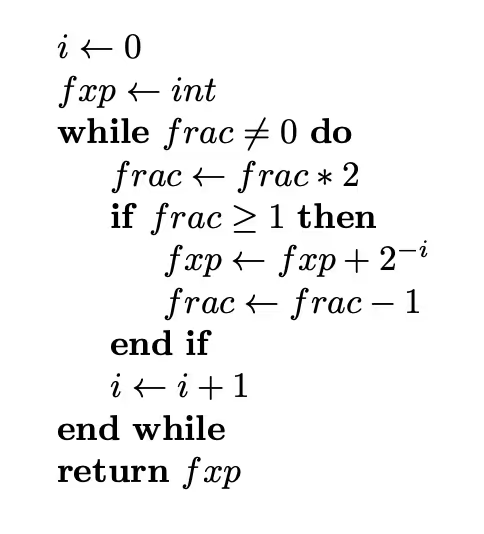

To convert a decimal number into its fixed-point equivalent, we apply the following algorithm:

Where:

- fxp stores the fixed-point representation,

- int is the integer part of the decimal number,

- frac is the fractional part of the decimal number.

To understand why floating point is often preferred over fixed point, we need to compare the range of values representable by each.

Understanding Dynamic Range: Floating vs Fixed Point

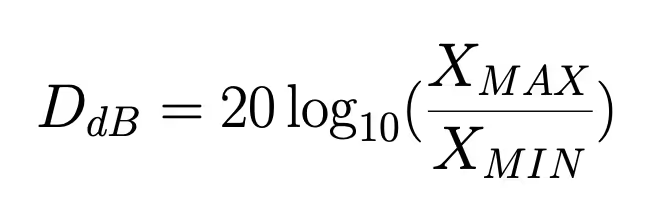

To measure the range of values a variable can represent, we use a quantity called dynamic range. The dynamic range is the value expressed in decibels of the ratio between the largest achievable value and the smallest possible value. The formula for dynamic range is therefore as follows, where XMAX is the maximum representable amplitude and XMIN the minimum representable amplitude.

The value of the dynamic range therefore depends on the size of the exponent in the representation format. For example, let’s take the float data type, which represents real numbers on 32 bits with 1 sign bit, 8 exponent bits, and 23 mantissa bits. We can estimate the dynamic range as follows: with 8 bits to represent the exponent, the largest possible exponent value is 2⁸ – 1 = 127, and the smallest is –127. The ratio XMAX / XMIN is therefore 2^(2×127 + 1).

The dynamic range of the float data type (IEEE 754) is therefore 1535 (pretty good! Well… it depends what you compare it to).

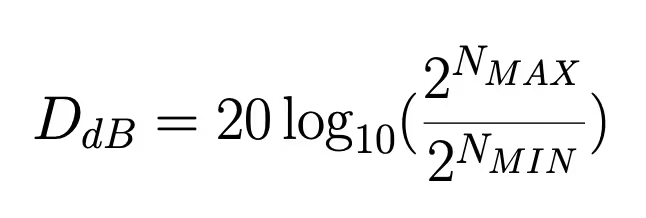

If we compare it with the dynamic range of fixed-point representation, we see that the dynamic range evolves linearly with the number of bits used to encode the fixed-point variable. If we denote NMAX as the index of the most significant bit and NMIN as the index of the least significant bit, we obtain the following formula: ✅

Pour 32 bits, on a donc une gamme dynamique en décibels de 186. On est loin des 1535 du format float.

Advantages of Fixed Point

Despite having a smaller dynamic range, fixed point allows the use of binary and integer operations, which often consume less energy than float operations for the same calculation.

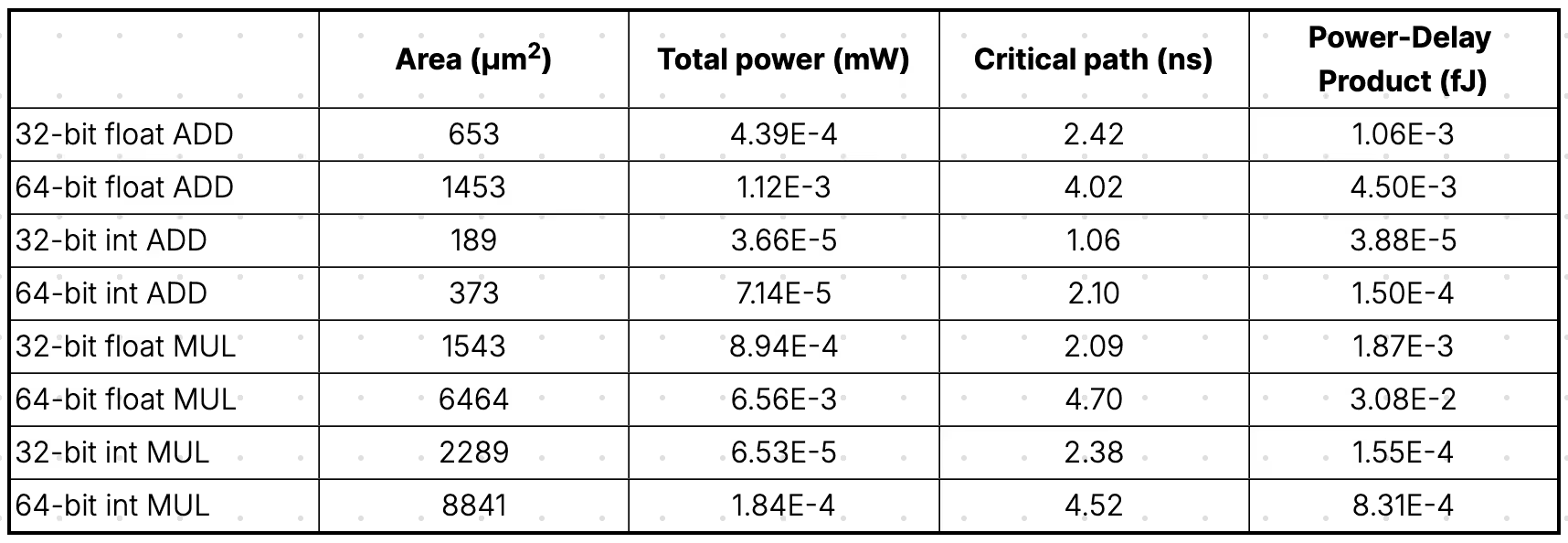

The table below details these performance differences for addition and multiplication operations on ac_int and ac_float types (32 and 64 bits) on an ASIC target (a Fully Depleted Silicon On Insulator, FDSOI 28nm). For each operation, we compare:

- The area used by the operation

- The total power consumption

- The critical path (latency)

- The Power-Delay Product (PDP), i.e., the energy required for the operation

The results show that moving to fixed-point arithmetic reduces the latency of addition operations (32 and 64 bits) and multiplication (64 bits). It also reduces energy consumption for both addition and multiplication, regardless of data size.

Key Takeaways on Fixed Point

By switching to fixed-point:

- Some arithmetic instructions (like addition) require fewer cycles than their floating-point equivalents — though this is not true for all operations (e.g., multiplication can be longer in fixed-point).

- Fixed-point operations consume less energy overall.

Converting Floating Point Code to Fixed Point: Best Practices

When converting code to fixed point, it cannot adapt to the orders of magnitude of the values taken by the variable at runtime, unlike floating point. Therefore, it is necessary to know in advance what values the variable will take before executing the code.

Dynamic Analysis of Variables

At WedoLow, we perform a dynamic analysis of floating-point variables in the program we want to optimize. We instrument these variables by replacing their type with one that records all the values taken by the variable during program execution.

There are many ways to determine the order of magnitude of values that variables will take during execution, but it is better to choose a method you can automate (especially if you plan to convert a large number of variables). The method should produce a readable and understandable result (simply printing every value of a variable in the console may not be very practical for your eyes) and should also be exploitable.

Defining Optimal Representation Formats

At the end of this dynamic analysis, we can define the fixed-point representation format each variable will take.

⚠️ Be careful, because a poorly adapted representation format can cause precision errors (loss of least significant bits if the integer part is larger than necessary) or overflow (loss of most significant bits if the fractional part is too large).

Also, avoid too frequently performing operations between variables of different formats, as this requires adding shifts. These shifts generate extra instructions in the machine code and, as a result, increase execution time.

To avoid too many shifts in fixed-point code, variables that interact frequently should be grouped together and assigned the same representation format.

Balancing Precision and Execution Time

Thus, for each variable, you need to balance between precision and reducing the number of instructions.

For each variable, you can choose an optimal format based on the maximum exponent value the variable will take. Alternatively, you can split a variable into two for more precise representation, or, conversely, represent multiple variables with the same format to reduce execution time.

Verification and Error Checking in Fixed Point Code

Non-Regression Testing

At the end of the optimization, it is essential to verify that the optimized code still produces the same result as the original version. To do this, a non-regression test is performed with the same input data, and the difference between the output values is measured to calculate the error induced by the change in representation format.

⚠️ Be careful: fixed-point types do not necessarily include error-handling mechanisms like floats in the IEEE-754 standard. This means there are no NaN (Not a Number) values, division by zero is not managed, and it is up to the developer to add checks to avoid such errors.

Handling Exceptional Cases

Also note that in some (rare) cases, fixed-point arithmetic can actually be more accurate than floating point. In these situations, results must be compared against a more precise type (double precision or even multiple-precision types such as MPFR [MPFR] [MPFR2]).

Summary: Floating vs Fixed Point in Embedded Software

- Fixed-point optimization consists of changing the representation format of real numbers from floating point (which adapts to value magnitudes at runtime) to fixed point (which does not adapt and fixes a default order of magnitude for its representation).

- To define this order of magnitude, you must know in advance the values that variables will take during program execution.

- Once you can anticipate the ranges, you define the fixed-point representation formats. For each variable, you must balance between reducing execution time and maintaining result precision.

- Switching to fixed point changes the nature of the assembly instructions needed for arithmetic operations. These new instructions are shorter and consume less energy.

- Fixed point impacts the results of arithmetic operations involving real numbers, so it is necessary to compare the behavior of the optimized code with that of the original code.

Sources

- [Yates] YATES, Randy. Fixed-point arithmetic: An introduction. Digital Signal Labs, 2009, vol. 81, no. 83, p. 198.

- [MPFR] FOUSSE, Laurent, HANROT, Guillaume, LEFÈVRE, Vincent, et al. MPFR: A multiple-precision binary floating-point library with correct rounding. ACM Transactions on Mathematical Software (TOMS), 2007, vol. 33, no. 2, p. 13-es.

- [MPFR2] GNU MPFR 4.2.1 manual, https://www.mpfr.org/mpfr-current/mpfr.html#Top

- [Benjamin Barrois] Methods to evaluate accuracy-energy trade-off in operator-level approximate computing. Computer Arithmetic. Université de Rennes, 2017. English. ⟨NNT: 2017REN1S097⟩. ⟨tel-01665015v2⟩

- [08] IEEE Standard for Floating-Point Arithmetic. In: IEEE Std 754-2008 (2008), 1–70. doi: 10.1109/IEEESTD.2008.4610935.

.svg)

.svg)